Project Overview 3 | DeepSeek Shared User Data With Chinese Company ByteDance

Page information

Author: Rocky | Date: 25-02-28 10:04 | Views: 27 | Comments: 0

Liang Wenfeng, an AI enthusiast who had been trading since the 2007-2008 financial crisis while attending Zhejiang University, co-founded High-Flyer in February 2016; the firm later became the sole backer of DeepSeek. While we have seen attempts to introduce new architectures such as Mamba and, more recently, xLSTM, to name just a few, it seems likely that the decoder-only transformer is here to stay, at least for the most part. Distilled Models: Smaller, fine-tuned versions based on Qwen and Llama architectures. DeepSeek-R1 achieves state-of-the-art results on various benchmarks and offers both its base models and distilled versions for community use. Comprehensive evaluations reveal that DeepSeek-V3 outperforms other open-source models and achieves performance comparable to leading closed-source models. DeepSeek-V3 achieves the best performance on most benchmarks, especially on math and code tasks. Huawei Ascend NPU: Supports running DeepSeek-V3 on Huawei Ascend devices. The DeepSeek-V3 series (including Base and Chat) supports commercial use. The DeepSeek Chat V3 model has a top score on aider's code editing benchmark.

In-depth evaluations have been conducted on the base and chat models, comparing them to existing benchmarks. In theory, this could even have beneficial regularizing effects on training, and DeepSeek reports finding such effects in its technical reports. Even Chinese AI experts think talent is the primary bottleneck in catching up. The model may generate answers that are inaccurate, omit key information, or include irrelevant or redundant text, and it may produce socially unacceptable or undesirable text even if the prompt itself does not include anything explicitly offensive. AMD GPU: Enables running the DeepSeek-V3 model on AMD GPUs via SGLang in both BF16 and FP8 modes. Notably, SGLang v0.4.1 fully supports running DeepSeek-V3 on both NVIDIA and AMD GPUs, making it a highly versatile and robust solution. vLLM v0.6.6 supports DeepSeek-V3 inference for FP8 and BF16 modes on both NVIDIA and AMD GPUs. TensorRT-LLM now supports the DeepSeek-V3 model, offering precision options such as BF16 and INT4/INT8 weight-only. DeepSeek-V3 stands as the best-performing open-source model, and also exhibits competitive performance against frontier closed-source models.
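To make the precision options above concrete, here is a minimal sketch of how the choice of weight format changes the memory needed just to hold a model's weights. The function name `weight_memory_gb` and the 1-billion-parameter example are hypothetical, chosen only for illustration. The estimate assumes the nominal storage width of each format (2 bytes for BF16, 1 byte for FP8 and INT8, half a byte for INT4) and ignores quantization scales, activations, and the KV cache, so real deployments will need more.

```python
# Nominal storage width per weight, in bytes, for each precision mode.
BYTES_PER_WEIGHT = {
    "bf16": 2.0,
    "fp8": 1.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Rough weight-only memory estimate in decimal gigabytes (1 GB = 1e9 bytes).

    Assumption: every parameter is stored at the nominal width of `precision`.
    Scales, zero-points, activations, and KV cache are not counted.
    """
    return num_params * BYTES_PER_WEIGHT[precision] / 1e9


if __name__ == "__main__":
    # Hypothetical 1-billion-parameter model, used only as an example.
    for mode in ("bf16", "fp8", "int8", "int4"):
        print(mode, weight_memory_gb(1_000_000_000, mode), "GB")
```

Under these assumptions, moving from BF16 to FP8 or INT8 halves the weight footprint, and INT4 halves it again, which is the usual motivation for weight-only quantization.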

vLLM: Supports the DeepSeek-V3 model with FP8 and BF16 modes for tensor parallelism and pipeline parallelism. This moment, as illustrated in Table 3, occurs in an intermediate version of the model. We present DeepSeek-V3, a strong Mixture-of-Experts (MoE) language model with 671B total parameters, of which 37B are activated for each token. The total size of DeepSeek-V3 models on Hugging Face is 685B, which includes 671B of the Main Model weights and 14B of the Multi-Token Prediction (MTP) Module weights. Multi-Token Prediction (MTP) is in development, and progress can be tracked in the optimization plan. We investigate a Multi-Token Prediction (MTP) objective and show that it benefits model performance. SGLang: Fully supports the DeepSeek-V3 model in both BF16 and FP8 inference modes, with Multi-Token Prediction coming soon. Meanwhile, we also maintain control over the output style and length of DeepSeek-V3. Our pipeline elegantly incorporates the verification and reflection patterns of R1 into DeepSeek-V3 and notably improves its reasoning performance. On January 20th, a Chinese company named DeepSeek released a new reasoning model called R1. If you are looking for where to buy DeepSeek, note that the DeepSeek-named cryptocurrency currently on the market is likely inspired by, not owned by, the AI company.
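The parameter counts quoted above can be checked with a few lines of arithmetic. The sketch below uses only the figures stated in the text (671B main-model weights, 14B MTP module weights, 37B parameters activated per token); the variable names are ours, chosen for illustration.

```python
# Figures as stated in the text, in billions of parameters.
MAIN_MODEL_B = 671   # main model weights
MTP_MODULE_B = 14    # Multi-Token Prediction module weights
ACTIVATED_B = 37     # parameters activated for each token

# Total size as published on Hugging Face: main model plus MTP module.
hugging_face_total_b = MAIN_MODEL_B + MTP_MODULE_B

# Share of the main model's parameters used for any single token.
activated_fraction = ACTIVATED_B / MAIN_MODEL_B

if __name__ == "__main__":
    print(f"Total on Hugging Face: {hugging_face_total_b}B")
    print(f"Activated per token: {activated_fraction:.1%} of the main model")
```

The sum reproduces the 685B total, and the ratio shows that only about one parameter in eighteen takes part in processing a given token, which is the practical appeal of a Mixture-of-Experts design.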

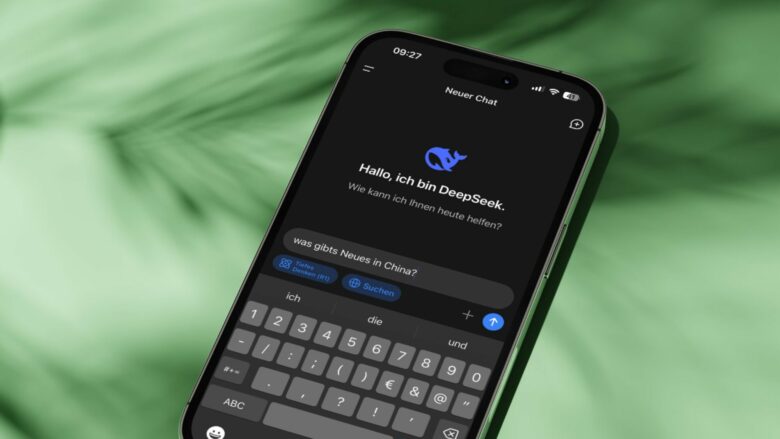

All models are evaluated in a configuration that limits the output length to 8K. Benchmarks containing fewer than 1000 samples are tested multiple times using varying temperature settings to derive robust final results. Our evaluation results demonstrate that DeepSeek LLM 67B surpasses LLaMA-2 70B on various benchmarks, particularly in the domains of code, mathematics, and reasoning. I will consider adding 32g as well if there is interest, and once I have finished perplexity and evaluation comparisons, but at this time 32g models are still not fully tested with AutoAWQ and vLLM. Some are referring to the DeepSeek release as a Sputnik moment for AI in America. Within two weeks of the release of its first free chatbot app, the mobile app skyrocketed to the top of the app store charts in the United States. The truth of the matter is that the vast majority of your changes happen at the configuration and root level of the app. They are simply very talented engineers and show why China is a serious competitor to the US.
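The evaluation protocol described at the start of the paragraph above (an 8K output limit, with small benchmarks run several times at different temperatures) could be sketched roughly as follows. This is an illustration under stated assumptions, not the authors' harness: `score_fn` is a hypothetical callback that runs the model on one sample and returns a score, the temperature values are placeholders, "8K" is taken to mean 8192 tokens, and averaging the runs is our guess at how the repeated results are combined.

```python
from statistics import mean
from typing import Callable, Sequence

MAX_OUTPUT_TOKENS = 8192   # assumption: "8K" read as 8192 tokens
SMALL_BENCHMARK = 1000     # threshold stated in the text


def evaluate_benchmark(
    samples: Sequence[object],
    score_fn: Callable[[object, float, int], float],   # hypothetical callback
    temperatures: Sequence[float] = (0.2, 0.6, 1.0),   # placeholder values
    default_temperature: float = 0.0,                  # placeholder value
) -> float:
    """Return the benchmark score, repeating small benchmarks across temperatures."""
    if len(samples) < SMALL_BENCHMARK:
        # Small benchmark: one full pass per temperature setting.
        runs = list(temperatures)
    else:
        # Large benchmark: a single pass is treated as stable enough.
        runs = [default_temperature]
    per_run = [
        mean(score_fn(sample, t, MAX_OUTPUT_TOKENS) for sample in samples)
        for t in runs
    ]
    # Assumption: the final result is the mean over the repeated runs.
    return mean(per_run)
```

Repeating small benchmarks reduces the influence of sampling noise, since a handful of samples can swing the score noticeably from one run to the next.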
